Issue: How to reset the Administrator password in DAC 10g (Oracle BI Data Warehouse Administration Console)

Solution:

There is no known algorithm to decrypt the DAC Administrator password, which is stored in the W_ETL_USER metadata table of the DAC repository.

I searched many websites for a solution and went through the Oracle documentation, but unfortunately I found no way to reset the password anywhere.

One complication: DAC also has other user accounts with the "Developer" and "Operator" roles, so I did not want to disturb the existing accounts just to reset the Administrator password.

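Let us look into the trick now. The idea is to empty W_ETL_USER so that DAC falls back to its default Administrator login, then restore the other accounts from a backup.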

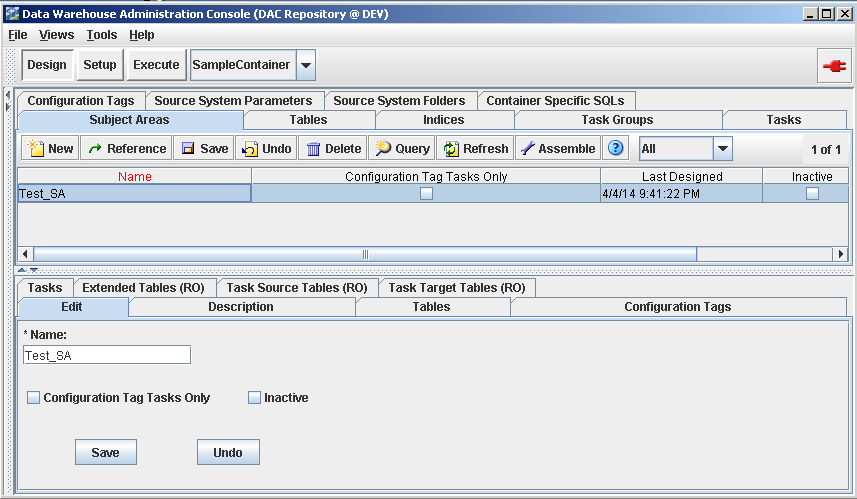

Step 1: Close the DAC client.

Step 2: Take a backup of the W_ETL_USER table.

create table w_etl_user_bkp as select * from w_etl_user;
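Before truncating anything, it is worth confirming that the backup really holds every row (in Oracle, compare `select count(*) from w_etl_user;` with `select count(*) from w_etl_user_bkp;`). The sketch below simulates that check locally with Python's built-in sqlite3 module. It is only an illustration: the in-memory database, the column names (username, password, role) and the sample rows are hypothetical stand-ins, not the real DAC repository schema.

```python
import sqlite3

# Hypothetical stand-in for the DAC repository: an in-memory SQLite database.
# Column names and values are assumptions, not the real W_ETL_USER layout.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE w_etl_user (username TEXT, password TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO w_etl_user VALUES (?, ?, ?)",
    [
        ("Administrator", "<encrypted-old>", "Administrator"),
        ("dev1", "<encrypted-dev1>", "Developer"),
        ("ops1", "<encrypted-ops1>", "Operator"),
    ],
)

# Step 2: the same CREATE TABLE ... AS SELECT statement used in the article.
conn.execute("CREATE TABLE w_etl_user_bkp AS SELECT * FROM w_etl_user")


def row_count(table: str) -> int:
    """Return the number of rows in the given table."""
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]


original_rows = row_count("w_etl_user")
backup_rows = row_count("w_etl_user_bkp")

# Only move on to Step 3 (truncate) if every row made it into the backup.
backup_ok = original_rows == backup_rows
print(original_rows, backup_rows, backup_ok)  # prints: 3 3 True
```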

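Step 3: Truncate the W_ETL_USER table. TRUNCATE is a DDL statement in Oracle: it commits implicitly and cannot be rolled back, so make sure the backup from Step 2 succeeded first.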

truncate table w_etl_user;

Step 4: Open the DAC client and log in with the following credentials:

User name: Administrator

Password: Administrator

Step 5: DAC will now prompt you for a new Administrator password. Enter the new password.

Step 6: Finally, insert the remaining users and their details from the backup table.

insert into w_etl_user select * from w_etl_user_bkp where username!='Administrator';

commit;
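The `username != 'Administrator'` filter is what makes the restore safe: the new Administrator row written by DAC in Step 5 stays, and the old one from the backup is not brought back. The following sketch replays Steps 2 to 6 against an in-memory SQLite database to show the end result. It is a local simulation under assumptions: the columns and sample rows are hypothetical, SQLite has no TRUNCATE so an unqualified DELETE stands in for it, and the Step 5 row is inserted by hand here, whereas in reality DAC writes it.

```python
import sqlite3

# In-memory SQLite stand-in for the DAC repository (hypothetical columns/values).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE w_etl_user (username TEXT, password TEXT, role TEXT)")
conn.executemany(
    "INSERT INTO w_etl_user VALUES (?, ?, ?)",
    [
        ("Administrator", "<old>", "Administrator"),
        ("dev1", "<dev1>", "Developer"),
        ("ops1", "<ops1>", "Operator"),
    ],
)

# Step 2: back up the table.
conn.execute("CREATE TABLE w_etl_user_bkp AS SELECT * FROM w_etl_user")

# Step 3: SQLite has no TRUNCATE; an unqualified DELETE empties the table.
conn.execute("DELETE FROM w_etl_user")

# Steps 4-5: simulate DAC storing a fresh Administrator row with the new password.
conn.execute(
    "INSERT INTO w_etl_user VALUES ('Administrator', '<new>', 'Administrator')"
)

# Step 6: restore every backed-up user except the old Administrator row.
conn.execute(
    "INSERT INTO w_etl_user SELECT * FROM w_etl_user_bkp "
    "WHERE username != 'Administrator'"
)
conn.commit()

rows = conn.execute(
    "SELECT username, password FROM w_etl_user ORDER BY username"
).fetchall()
for row in rows:
    print(row)
# ('Administrator', '<new>')
# ('dev1', '<dev1>')
# ('ops1', '<ops1>')
```

Without the WHERE filter, the old Administrator row would be inserted next to the new one, leaving two Administrator rows (or failing outright if the real table enforces a unique key on the user name, which this sketch does not model).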

You now have the new Administrator password, and all the existing user accounts are back in place.
